Solving An Old Problem With Our New Technology

By Graham Voysey

Recently, I noticed a disturbing trend: the sink in our office's common kitchen was often left full of coffee cups, plates, and utensils. This couldn't remain the normal state of affairs. Our excellent office manager, Abby, was wasting time haranguing people on Slack, and guests were getting a bad impression. This just wasn't tenable.

Thankfully, we're an AI company whose flagship product, Brain Builder, is a SaaS image classification tool that learns from very small data sets. We also had two engineers with a slightly weird sense of humor, a Raspberry Pi Zero W, and some Velcro hanging around.

The Plan

The plan was pretty simple. We'd tape a RasPi with a camera on it underneath the kitchen cupboards above the sink and orient it so it stayed out of sight but still had a clear view of the sink.

Then we'd write some hacky OpenCV functions to establish a stable thresholded baseline image of the clean sink, poll the camera every so often, and check whether the new image was wildly different from that baseline. If it was, the sink was probably dirty!
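For illustration, here is a minimal sketch of that kind of naive baseline comparison, written in plain Python rather than OpenCV so it runs anywhere. Grayscale frames are lists of pixel rows, and `PIXEL_DELTA` and `CHANGED_FRACTION` are hypothetical tuning values, not the ones the original bot used.

```python
from typing import List

Frame = List[List[int]]  # grayscale image: rows of 0-255 pixel values

# Hypothetical tuning values, chosen for illustration only.
PIXEL_DELTA = 40        # how much a pixel must change to count as "different"
CHANGED_FRACTION = 0.2  # fraction of changed pixels that flags the frame


def changed_fraction(baseline: Frame, frame: Frame) -> float:
    """Return the fraction of pixels differing from the baseline by more than PIXEL_DELTA."""
    total = 0
    changed = 0
    for base_row, row in zip(baseline, frame):
        for base_px, px in zip(base_row, row):
            total += 1
            if abs(px - base_px) > PIXEL_DELTA:
                changed += 1
    return changed / total if total else 0.0


def looks_dirty(baseline: Frame, frame: Frame) -> bool:
    """Naive rule: any frame 'wildly different' from the clean baseline is assumed dirty."""
    return changed_fraction(baseline, frame) > CHANGED_FRACTION
```

Note that any large change trips this rule, whether it is a stack of mugs or a person standing at the sink.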

We finagled a Slack API key and wrote *sinkshamer*: a quick little bot that would pick a random quip from a quotes file and post that text, along with a photo of the sink, every time it saw a dirty sink three times within five minutes.
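The "three dirty sightings within five minutes" rule amounts to a sliding-window counter. Here is a minimal sketch, assuming the quotes file holds one quip per line. The class and function names are hypothetical, and resetting the window after it fires (so one messy episode produces one post) is an assumption, not documented behavior of the bot.

```python
import random
import time
from collections import deque
from typing import Deque, List, Optional


class DirtyWindow:
    """Fire when `threshold` dirty detections land within `window_s` seconds."""

    def __init__(self, threshold: int = 3, window_s: float = 300.0) -> None:
        self.threshold = threshold
        self.window_s = window_s
        self.hits: Deque[float] = deque()

    def record_dirty(self, now: Optional[float] = None) -> bool:
        """Record one dirty detection; return True if the window now holds enough hits."""
        now = time.time() if now is None else now
        self.hits.append(now)
        # Drop detections that have aged out of the window.
        while self.hits and now - self.hits[0] > self.window_s:
            self.hits.popleft()
        if len(self.hits) >= self.threshold:
            self.hits.clear()  # assumed reset so one dirty episode posts only once
            return True
        return False


def load_quips(path: str) -> List[str]:
    """Read one quip per non-blank line from a quotes file."""
    with open(path, encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]


def pick_quip(quips: List[str], rng: Optional[random.Random] = None) -> str:
    """Choose one quip uniformly at random (pass a seeded Random for repeatability)."""
    chooser = rng if rng is not None else random
    return chooser.choice(quips)
```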

What could _possibly_ go wrong? (Foreshadowing is the mark of a quality blog post.)

Sinkshamer Attempt One

As anyone who has tried it knows, writing well-behaved, logic-based computer vision algorithms is only really possible in tightly constrained applications. If you cannot guarantee the lighting, camera orientation, frame rate, exposure time, and so on of your runtime environment with a high degree of precision, you should not expect code that works just fine in testing to work well when deployed in the real world.

In short order, we proved this point yet again: our really simple thresholding approach failed completely within the first 90 seconds of running.

Our threshold logic was skewed when people stood in front of the (clean) sink to talk or make tea or, you know, _be in the kitchen at all_.

Our false positive rate over the first 15 minutes of deployment (our first three decisions) was 100%, which was enough to prompt our CTO, Anatoli, to yell at the bot on Slack.

I hastily disabled the Slack posting feature. I was about to bring the bot down entirely when I realized what I should do instead: leave it running, collect sink images on a timer, build a data set from them, and let our actual product do all the hard work for me.
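A timer-driven collection loop can be very small. In this sketch, `capture` is a hypothetical callable that writes one camera frame to the given path (on a Pi it would wrap whatever camera library you use), and the timestamped file-naming scheme is an assumption. The `sleep` parameter is injectable only so the loop can be exercised without waiting.

```python
import os
import time
from datetime import datetime
from typing import Callable, List


def collect_images(capture: Callable[[str], None], out_dir: str,
                   interval_s: float, count: int,
                   sleep: Callable[[float], None] = time.sleep) -> List[str]:
    """Capture `count` images, one every `interval_s` seconds, into `out_dir`."""
    os.makedirs(out_dir, exist_ok=True)
    paths = []
    for i in range(count):
        stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
        # The index keeps names unique even if two captures share a timestamp.
        path = os.path.join(out_dir, f"sink-{stamp}-{i:04d}.jpg")
        capture(path)
        paths.append(path)
        if i < count - 1:
            sleep(interval_s)  # no need to wait after the final capture
    return paths
```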

Sinkshamer Attempt Two

Over the course of the next day, I recorded about 150 images and built a data set from them. Roughly 80% of them (about 120) show a clean sink. The dirty-sink images were varied, as expected: coffee cups, espresso cups, plates, dishes, utensils, and food scraps all appear. Some items show up very often, like blue or green coffee mugs; others appear only rarely, like a single fork.

With that, I was ready to fire up Brain Builder and get training!

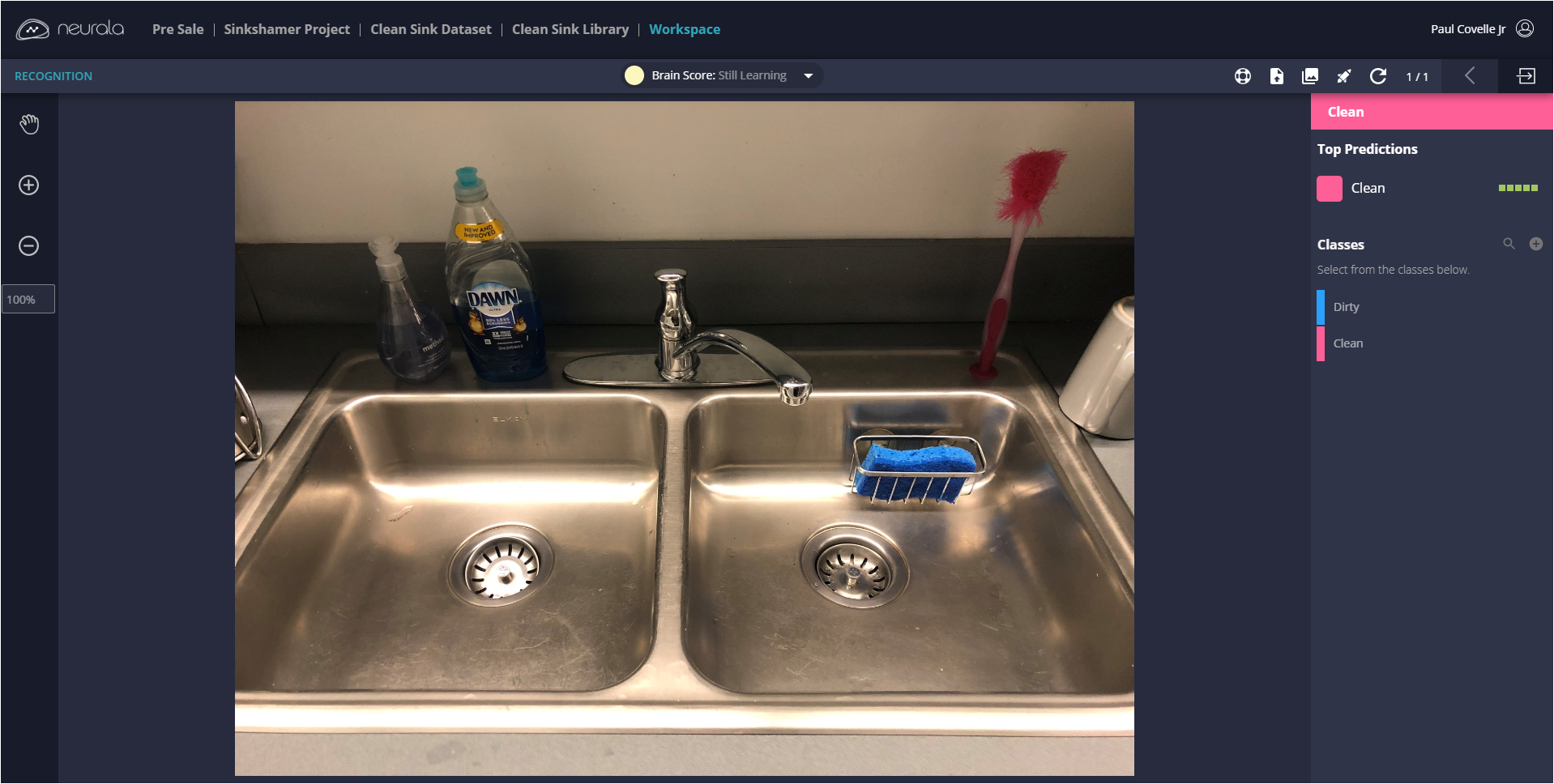

Building a sink-shaming classifier with Brain Builder

Requirements:

- Raspberry Pi Zero W, Camera, and Case ($45)

- Power Supply and 8 GB microSD Card ($10)

- Brain Builder Account (Free)

Data set upload

I logged into my Brain Builder account and uploaded the folder of sink images.

Adding classes, tagging

I added a "clean" and a "dirty" class and then got on with tagging the images. After about 20 examples of each class, the brain was ready to go. With the classifier trained, the bot's logic became:

- Sample the sink: take a burst of three images spaced 45 seconds apart, every 5 minutes.

- For each burst, if the majority of the images are classified as dirty and the last image is also dirty, count the poll as dirty.

- If the poll is dirty, post the image to Slack with randomly selected flavor text about sinks or germs.
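The burst-voting rule described in the list above is small enough to sketch directly. Here `classify_next` is a hypothetical callable that captures a frame and returns the classifier's label ("clean" or "dirty"); Brain Builder's actual API is not shown, and all names here are illustrative rather than the bot's real code.

```python
import time
from typing import Callable, Sequence


def burst_is_dirty(labels: Sequence[str]) -> bool:
    """A burst counts as dirty only if most images are dirty AND the last one is too."""
    if not labels:
        return False
    dirty = sum(1 for label in labels if label == "dirty")
    return dirty > len(labels) / 2 and labels[-1] == "dirty"


def poll_once(classify_next: Callable[[], str],
              sleep: Callable[[float], None] = time.sleep,
              burst_size: int = 3,
              spacing_s: float = 45.0) -> bool:
    """Take one burst of `burst_size` labeled images, `spacing_s` seconds apart, and vote."""
    labels = []
    for i in range(burst_size):
        labels.append(classify_next())
        if i < burst_size - 1:
            sleep(spacing_s)
    return burst_is_dirty(labels)
```

With three images, at least two must be dirty, so a single misclassified frame cannot trigger a post by itself. Requiring the last image to be dirty presumably also keeps the bot from shaming a sink that someone cleaned partway through the burst. A main loop would then call `poll_once` every 5 minutes and post to Slack whenever it returns `True`.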

As a result, the main loop is simple, and the entire bot is 125 lines of code.

To this day, the Sinkshamer is still running, and, sadly, we rarely hear from our creation in the Slack channel. I would call that mission accomplished: for the most part, we now boast a clean office sink.