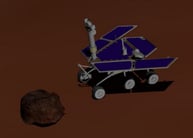

This video further illustrates how a simulated Mars rover can extract optic flow information while moving through a virtual Mars environment. The work, sponsored by NASA and realized by Neurala (www.neurala.com) and the CELEST Neuromorphics Lab (nl.bu.edu), shows how the movement of a simulated robot relative to its environment produces important cues that the visual system can use to estimate motion.

This information, often termed optic flow, allows a biological system to understand how it and other objects are moving through the world.
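
To make the idea concrete, here is a minimal sketch of the flow pattern produced by pure camera translation under a textbook pinhole camera model. It is not the neural model used in this project; the function names, the focal length default, and the sample values are assumptions chosen for illustration.

```python
# Illustrative sketch only: textbook motion-field equations for a pinhole
# camera that translates without rotating. Names and values are hypothetical
# and are not taken from the Neurala / Neuromorphics Lab models.

def translational_flow(x, y, depth, translation, focal=1.0):
    """Image velocity (u, v) at image point (x, y).

    depth is the distance Z of the scene point along the optical axis,
    translation is the camera velocity (tx, ty, tz) in camera coordinates.
    """
    tx, ty, tz = translation
    u = (x * tz - focal * tx) / depth
    v = (y * tz - focal * ty) / depth
    return u, v


def focus_of_expansion(translation, focal=1.0):
    """Image point where translational flow vanishes (the heading direction)."""
    tx, ty, tz = translation
    if tz == 0:
        raise ValueError("no finite focus of expansion without forward motion")
    return focal * tx / tz, focal * ty / tz


if __name__ == "__main__":
    forward = (0.0, 0.0, 1.0)  # rover driving straight ahead
    # Flow points away from the image center and shrinks with distance.
    print(translational_flow(0.5, -0.25, 2.0, forward))
    print(translational_flow(0.5, -0.25, 8.0, forward))
    print(focus_of_expansion((0.2, 0.0, 1.0)))
```

With forward motion the flow vectors radiate outward from the focus of expansion, and nearer points move faster across the image than distant ones. Those two regularities are what let an observer read heading and relative depth from optic flow.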

Neurala, the Boston University Neuromorphics Lab, and Mark Motter at NASA Langley are working together on a NASA-sponsored STTR project to build neural models for autonomous navigation in a simulated Mars rover. The video below was created by Tim Barnes, Gennady Livitz, and Anatoli Gorchetchnikov.